H.264, also known as MPEG-4 Part 10, is a highly compressed digital video codec standard proposed by the Joint Video Team (JVT), which was formed by the ITU-T Video Coding Experts Group (VCEG) and the ISO/IEC Moving Picture Experts Group (MPEG). The standard is usually called H.264/AVC (or AVC/H.264, H.264/MPEG-4 AVC, or MPEG-4/H.264 AVC), a name that makes both of its developers explicit.

The main parts of the H.264 standard include the access unit delimiter, SEI (supplemental enhancement information), the primary coded picture, and the redundant coded picture. There are also the Instantaneous Decoding Refresh (IDR), the Hypothetical Reference Decoder (HRD), and the Hypothetical Stream Scheduler (HSS).

1. Background

H.264 is a new generation of digital video compression format after MPEG-4, jointly proposed by the International Organization for Standardization (ISO) and the International Telecommunication Union (ITU). It was developed by the Joint Video Team (JVT) of ITU-T's VCEG (Video Coding Experts Group) and ISO/IEC's MPEG (Moving Picture Experts Group). The standard originally grew out of an ITU-T project called H.26L. Although the name H.26L is not very common, it has remained in use. H.264 is the ITU-T name, following the H.26x series of video coding standards, and AVC is the name used on the ISO/IEC MPEG side.

Two international organizations formulate video codec technology. One is the International Telecommunication Union (ITU-T), which has developed standards such as H.261, H.263, and H.263+. The other is the International Organization for Standardization (ISO), which has established standards such as MPEG-1, MPEG-2, and MPEG-4. H.264 is a digital video coding standard formulated by the Joint Video Team (JVT) that the two organizations established together, so it is both ITU-T's H.264 and ISO/IEC's MPEG-4 Part 10, Advanced Video Coding (AVC). Therefore, MPEG-4 AVC, MPEG-4 Part 10, and ISO/IEC 14496-10 all refer to H.264.

2. H.264 is established on the basis of MPEG-4 technology, and its encoding and decoding process mainly includes 5 parts:

(1) Inter-frame and intra-frame prediction (Estimation)

(2) Transform and inverse transformation

(3) Quantization and dequantization

(4) Loop filter

(5) Entropy Coding

The main goal of the H.264 standard is to provide better image quality at the same bandwidth than other existing video coding standards. At the same image quality, its compression efficiency is about twice that of the previous standard (MPEG-2).

H.264 defines 11 levels and 7 profiles (sub-protocol formats, or algorithm subsets). A level constrains the external environment, such as bandwidth requirements, memory requirements, and network performance; the higher the level, the higher the bandwidth requirement and the higher the achievable video quality. A profile targets a specific application: it defines the subset of features the encoder may use and standardizes encoder complexity across different application environments.

3. Advantages

(1) Low Bit Rate

Compared with compression technologies such as MPEG-2 and MPEG-4 ASP, at the same image quality the data volume produced by H.264 is only 1/8 that of MPEG-2 and 1/3 that of MPEG-4.

(2) High-quality images

H.264 can provide continuous and smooth high-quality images (DVD quality).

(3) Strong fault tolerance

H.264 provides the tools needed to handle errors such as packet loss, which are common in unstable network environments.

(4) Strong network adaptability

H.264 provides a Network Abstraction Layer, which enables H.264 files to be easily transmitted on different networks (such as the Internet, CDMA, GPRS, WCDMA, CDMA2000, etc.).

The biggest advantage of H.264 is its very high data compression ratio. At the same image quality, the compression ratio of H.264 is more than 2 times that of MPEG-2 and 1.5 to 2 times that of MPEG-4. For example, an original file of 88GB becomes 3.5GB after MPEG-2 compression, a compression ratio of 25:1, while it becomes 879MB after H.264 compression, a compression ratio of 102:1. The low bit rate plays an important role in this high compression ratio. Compared with compression technologies such as MPEG-2 and MPEG-4 ASP, H.264 greatly reduces users' download time and data traffic charges. It is especially worth noting that H.264 combines this high compression ratio with high-quality, smooth images. As a result, H.264-compressed video requires less bandwidth during network transmission and is more economical.
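The ratios quoted above can be checked with a few lines of arithmetic. This is only a sketch: it assumes binary units (1GB = 1024MB), which is the reading that reproduces the article's 102:1 figure, and the function name is illustrative.

```python
def compression_ratio(original_bytes, compressed_bytes):
    """Return how many times smaller the compressed data is than the original."""
    return original_bytes / compressed_bytes


# Assumption: binary units, i.e. 1 GB = 1024 MB.
MB = 1024 ** 2
GB = 1024 ** 3

original = 88 * GB
mpeg2_ratio = compression_ratio(original, 3.5 * GB)
h264_ratio = compression_ratio(original, 879 * MB)

print(f"MPEG-2 ratio: {mpeg2_ratio:.1f}:1")
print(f"H.264 ratio:  {h264_ratio:.1f}:1")
```

With decimal units (1GB = 1000MB) the H.264 figure would come out closer to 100:1, so the exact number depends on that convention.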

4. Features

The main features of the H.264 standard are as follows:

(1) Higher coding efficiency

Compared with the bit-rate efficiency of standards such as H.263, H.264 saves more than 50% of the bit rate on average.

(2) High-quality video images

H.264 can provide high-quality video images at low bit rates. Delivering high-quality images over lower bandwidths is the highlight of H.264 applications.

(3) Improve network adaptability

H.264 can work in low-latency mode for real-time communication applications (such as video conferencing), and can also work in video storage or video streaming servers, where there are no delay constraints.

(4) Adopt a mixed coding structure

Like H.263, H.264 uses a hybrid coding structure that combines DCT transform coding with DPCM differential coding. It also adds new coding methods such as multi-mode motion estimation, intra-frame prediction, multi-frame prediction, context-based variable length coding, and a 4×4 two-dimensional integer transform, all of which improve coding efficiency.

(5) H.264 has fewer encoding options

Encoding with H.263 often requires setting many options, which makes encoding more difficult. H.264 instead goes "back to basics": it strives for simplicity and reduces the complexity of configuring the encoder.

(6) H.264 can be applied in different occasions

H.264 can use different transmission and playback rates in different environments, and provides a rich set of error handling tools that can control or eliminate the effects of packet loss and bit errors.

(7) Error recovery function

H.264 provides tools to handle packet loss in network transmission, which makes it suitable for transmitting video data over wireless networks with a high bit error rate.

(8) Higher complexity

The performance improvement of H.264 comes at the cost of increased complexity. It is estimated that the computational complexity of H.264 encoding is about 3 times that of H.263, and the decoding complexity is about 2 times that of H.263.

5. Technology

H.264, like the previous standards, is a hybrid coding scheme of DPCM plus transform coding. However, it adopts a concise "back to basics" design without many options, and achieves much better compression performance than H.263++. It strengthens adaptability to various channels and adopts a "network-friendly" structure and syntax that helps in handling errors and packet loss. It also targets a wide range of applications, meeting the needs of different bit rates, different resolutions, and different transmission (or storage) scenarios.

Technically, it combines the advantages of previous standards and draws on the experience accumulated in standards development. Compared with H.263 v2 (H.263+) or the MPEG-4 Simple Profile, H.264 can save up to 50% of the bit rate at most bit rates when each standard is used with a comparably optimized encoder. H.264 provides consistently high video quality at all bit rates. It can work in low-latency mode for real-time communication applications (such as video conferencing), and it also works well in applications without delay restrictions, such as video storage and server-based video streaming. H.264 provides the tools needed to deal with packet loss in packet networks, as well as tools to deal with bit errors in error-prone wireless networks.

At the system level, H.264 introduces a new concept: a conceptual division between the Video Coding Layer (VCL) and the Network Abstraction Layer (NAL). The former is the core compressed representation of the video content, and the latter is the representation used to deliver that content over a specific type of network. This structure makes it easier to packetize information and to control its priority.

(1) Encoding

①Intra-frame prediction coding

Intra-frame coding is used to reduce the spatial redundancy of the image. To improve the efficiency of intra-frame coding, H.264 fully exploits the spatial correlation between adjacent macroblocks within a frame, since adjacent macroblocks usually have similar properties. Therefore, when encoding a given macroblock, the encoder first forms a prediction from the surrounding macroblocks (typically the upper-left, left, and upper macroblocks, because these have already been encoded), and then encodes the difference between the predicted value and the actual value. Compared with encoding the frame directly, this greatly reduces the bit rate.

H.264 provides 9 modes for 4×4 pixel block prediction, including 1 DC prediction and 8 directional predictions. In the figure, a total of 9 pixels, A to I, of the adjacent blocks have already been coded and can be used for prediction. If mode 4 is chosen, the 4 pixels a, b, c, and d are predicted to be equal to E, and the 4 pixels e, f, g, and h are predicted to be equal to F. For flat areas of the image that contain little spatial information, H.264 also supports 16×16 intra-frame coding.
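The horizontal case described above (each row of the 4×4 block copied from its left neighbour) can be sketched as follows. The pixel values are hypothetical, the function names are illustrative, and extending the pattern to rows three and four (neighbours G and H) is an assumption that follows the article's labelling.

```python
def predict_horizontal(left_pixels):
    """Build a 4x4 prediction in which every row copies its left neighbour."""
    return [[left] * 4 for left in left_pixels]


def residual(block, prediction):
    """Difference between the actual block and its prediction (what gets coded)."""
    return [
        [actual - predicted for actual, predicted in zip(block_row, pred_row)]
        for block_row, pred_row in zip(block, prediction)
    ]


# Hypothetical already-coded neighbours E, F, G, H to the left of the block.
left = [100, 102, 104, 106]

# Hypothetical 4x4 block to be coded (rows a-d, e-h, ...).
block = [
    [101, 100, 99, 100],
    [102, 103, 102, 101],
    [104, 104, 105, 104],
    [107, 106, 106, 105],
]

prediction = predict_horizontal(left)
print(residual(block, prediction))
```

The residual values are much smaller than the original pixel values, which is why coding the difference costs fewer bits than coding the block directly.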

②Inter-frame predictive coding

Inter-frame predictive coding exploits the temporal redundancy between consecutive frames through motion estimation and compensation. H.264 motion compensation supports most of the key features of previous video coding standards and flexibly adds more. In addition to P frames and B frames, H.264 supports a new frame type for switching between streams, the SP frame, as shown in Figure 3. With SP frames in the bitstream, a decoder can switch quickly between streams that have similar content but different bit rates. SP frames also support random access and fast playback modes. H.264 motion estimation has the following 4 characteristics.

Ⅰ. Segmentation of macroblocks of different sizes and shapes

Motion compensation for each 16×16 pixel macroblock can use partitions of different sizes and shapes, and H.264 supports 7 such modes. Small-block motion compensation handles fine motion detail better, reduces blocking artifacts, and improves image quality.
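As a rough illustration, the 7 partition modes can be listed and checked to tile a macroblock exactly. The article does not enumerate the sizes; the list below is the set of luma partition sizes defined by the H.264 standard, and the helper name is illustrative.

```python
MACROBLOCK_SIZE = 16

# (width, height) of each partition mode. The last three sizes are
# sub-partitions of an 8x8 block in the standard.
PARTITION_SIZES = [(16, 16), (16, 8), (8, 16), (8, 8), (8, 4), (4, 8), (4, 4)]


def blocks_per_macroblock(width, height):
    """Number of partitions of this size needed to cover one 16x16 macroblock."""
    return (MACROBLOCK_SIZE // width) * (MACROBLOCK_SIZE // height)


for width, height in PARTITION_SIZES:
    count = blocks_per_macroblock(width, height)
    print(f"{width}x{height}: {count} block(s), each with its own motion vector")
```

Smaller partitions follow fine motion more closely but need more motion vectors, so an encoder typically trades prediction accuracy against the bits spent on side information.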

Ⅱ High-precision sub-pixel motion compensation

H.263 uses half-pixel precision motion estimation, while H.264 can use 1/4 or 1/8 pixel precision. For the same content, the residual left by H.264 with 1/4 or 1/8 pixel motion estimation is smaller than the residual left by H.263 with half-pixel motion estimation. As a result, for the same quality, H.264 needs a lower bit rate for inter-frame coding.
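One common way to represent sub-pixel motion is to store each motion vector component as an integer count of quarter pixels. The sketch below assumes that representation; the function names are illustrative and not taken from any particular codec library.

```python
def split_quarter_pel(mv):
    """Split a motion-vector component stored in quarter-pixel units.

    Returns (whole_pixels, quarter_fraction) where quarter_fraction is 0..3,
    so that mv == 4 * whole_pixels + quarter_fraction.
    """
    whole_pixels = mv >> 2      # floor division by 4, also for negative values
    quarter_fraction = mv & 3   # remainder in quarter-pixel steps
    return whole_pixels, quarter_fraction


def to_pixels(mv):
    """Convert a quarter-pixel motion-vector component to pixels."""
    return mv / 4


for mv in (9, -5, 4):
    whole, frac = split_quarter_pel(mv)
    print(f"mv={mv}: {to_pixels(mv)} px = {whole} + {frac}/4")
```

The whole-pixel part selects the reference block position, and a non-zero fraction tells the decoder to interpolate between neighbouring reference samples.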

Ⅲ Multi-frame prediction

H.264 provides an optional multi-frame prediction function. During inter-frame encoding, up to 5 different reference frames can be selected, which gives better error resilience and improves video image quality. This feature is mainly useful in the following situations: periodic motion, translational motion, and a camera cutting back and forth between two different scenes.

Ⅳ Deblocking filter

H.264 defines an adaptive filter to remove block effects, which can handle horizontal and vertical block edges in the prediction loop, greatly reducing block effects.

③Integer transformation

In terms of transformation, H.264 uses a DCT-like transform on 4×4 pixel blocks, implemented as an integer spatial transform, so there is no mismatch in the inverse transform caused by rounding. Compared with a floating-point DCT, the integer transform introduces some additional approximation error, but because the quantization that follows the transform has its own quantization error, the effect of the error introduced by the integer transform is small by comparison. The integer transform also reduces the amount of computation and complexity, which makes it easier to port to fixed-point DSPs.
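A minimal sketch of a 4×4 integer transform of this kind is shown below. It uses the forward core matrix from the H.264 standard, which the article does not reproduce, and it omits the scaling that the standard folds into quantization. The helper names are illustrative.

```python
# Forward core transform matrix of the H.264 4x4 integer transform.
CF = [
    [1, 1, 1, 1],
    [2, 1, -1, -2],
    [1, -1, -1, 1],
    [1, -2, 2, -1],
]


def matmul(a, b):
    """Multiply two 4x4 integer matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transpose(m):
    return [list(row) for row in zip(*m)]


def forward_core_transform(block):
    """Compute CF * block * CF^T using integer arithmetic only."""
    return matmul(matmul(CF, block), transpose(CF))


# A flat (constant) block should put all of its energy in the DC coefficient.
flat_block = [[10] * 4 for _ in range(4)]
for row in forward_core_transform(flat_block):
    print(row)
```

Because every step is integer addition and multiplication by small constants, an encoder and a decoder built on different hardware compute exactly the same values.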

④Quantization

H.264 offers 52 different quantization step sizes, similar in spirit to the 31 quantization step sizes in H.263, but in H.264 the step size grows at a compound rate of about 12.5% per step rather than by a fixed constant.
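The effect of a compound growth rate can be sketched numerically. The 12.5% rate is the article's figure; the base step of 1.0 is a hypothetical starting value chosen only for illustration.

```python
def step_size(index, base_step=1.0, rate=0.125):
    """Quantization step after `index` increments of compound growth.

    base_step is a hypothetical starting value; rate is the article's 12.5%.
    """
    return base_step * (1 + rate) ** index


for index in (0, 1, 6, 12, 51):
    print(f"index {index:2d}: step = {step_size(index):.3f}")
```

With this rate the step size roughly doubles every 6 increments, so 52 steps cover a very wide range from fine to coarse quantization.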

H.264 also has two ways to read out the transform coefficients: zigzag scan and double scan. In most cases the simple zigzag scan is used; double scan is used only in blocks with a smaller quantization level, where it helps to improve coding efficiency.
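A zigzag scan visits the coefficients along anti-diagonals, starting at the top-left (low-frequency) corner. The sketch below generates that order for a 4×4 block; the function names are illustrative, and the double scan is not shown.

```python
def zigzag_order(n=4):
    """Return the (row, col) visiting order of a zigzag scan over an n x n block."""
    order = []
    for diagonal in range(2 * n - 1):
        rows = range(max(0, diagonal - n + 1), min(diagonal, n - 1) + 1)
        if diagonal % 2 == 0:
            rows = reversed(rows)  # even diagonals run from bottom-left to top-right
        order.extend((row, diagonal - row) for row in rows)
    return order


def zigzag_scan(block):
    """Flatten a square block of coefficients into zigzag order."""
    return [block[row][col] for row, col in zigzag_order(len(block))]


# Hypothetical quantized coefficients: energy concentrated at low frequencies.
coefficients = [
    [9, 4, 1, 0],
    [3, 2, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
print(zigzag_scan(coefficients))
```

Scanning this way tends to group the non-zero coefficients at the front and leave a long run of zeros at the end, which the entropy coder can represent compactly.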

⑤Entropy coding

The last step of the video coding process is entropy coding. H.264 uses two different entropy coding methods: Universal Variable Length Coding (UVLC) and Context-based Adaptive Binary Arithmetic Coding (CABAC).

In standards such as H.263, different VLC code tables are used depending on the type of data being coded, such as transform coefficients and motion vectors. The UVLC code table in H.264 offers a simpler approach: the same variable length code table is used no matter what type of data a symbol represents. The advantage is simplicity. The disadvantage is that a single code table is derived from one probability distribution model and does not take the correlation between coded symbols into account, so it does not perform very well at medium and high bit rates.
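One well-known code with this "single table for everything" property is the unsigned Exp-Golomb code, which the published H.264 syntax uses for many syntax elements. Whether it matches the exact UVLC table the article has in mind is an assumption; the sketch below works on bit strings for readability, and the function names are illustrative.

```python
def exp_golomb_encode(value):
    """Encode a non-negative integer as an unsigned Exp-Golomb bit string."""
    if value < 0:
        raise ValueError("unsigned Exp-Golomb codes only cover non-negative integers")
    binary = bin(value + 1)[2:]                 # binary form of value + 1
    return "0" * (len(binary) - 1) + binary     # prefix of zeros gives the length


def exp_golomb_decode(bits):
    """Decode a single unsigned Exp-Golomb codeword from a bit string."""
    leading_zeros = len(bits) - len(bits.lstrip("0"))
    return int(bits[leading_zeros:2 * leading_zeros + 1], 2) - 1


for value in range(5):
    print(value, exp_golomb_encode(value))
```

Small values get short codewords and large values get longer ones, regardless of which syntax element the value belongs to. That is simple, but it cannot adapt to the actual statistics of each element.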

Therefore, H.264 also provides the optional CABAC method. Arithmetic coding allows probability models for all syntax elements (transform coefficients, motion vectors, and so on) to be used on both the encoding and decoding sides. To improve the efficiency of arithmetic coding, a context modeling process lets the underlying probability model adapt to statistics that change from one video frame to the next. Context modeling provides conditional probability estimates for the coded symbols. With a suitable context model, the correlation between symbols can be removed by selecting the probability model according to the already coded symbols adjacent to the current symbol. Different syntax elements usually maintain different models.

The target application of H.264 covers most of the video services, such as cable remote monitoring, interactive media, digital TV, video conferencing, video on demand, streaming media services, etc.

To handle the differences in network transmission between applications, H.264 defines two layers: the Video Coding Layer (VCL), which is responsible for efficient representation of the video content, and the Network Abstraction Layer (NAL), which is responsible for packaging and transmitting the data in the manner the network requires (as shown in the figure: the overall framework of the standard).
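At the NAL level, every unit starts with a one-byte header that tells the network layer what it carries. The sketch below decodes that byte following the field layout in the H.264 specification (1 bit, 2 bits, 5 bits). The type table is deliberately partial and the function names are illustrative.

```python
# Partial table of NAL unit types from the H.264 specification.
NAL_UNIT_TYPES = {
    1: "coded slice of a non-IDR picture",
    5: "coded slice of an IDR picture",
    6: "supplemental enhancement information (SEI)",
    7: "sequence parameter set",
    8: "picture parameter set",
    9: "access unit delimiter",
}


def parse_nal_header(first_byte):
    """Split the first byte of a NAL unit into its three header fields."""
    return {
        "forbidden_zero_bit": first_byte >> 7,
        "nal_ref_idc": (first_byte >> 5) & 0x03,
        "nal_unit_type": first_byte & 0x1F,
    }


def describe_nal(first_byte):
    """Human-readable name of the NAL unit type, if it is in the partial table."""
    header = parse_nal_header(first_byte)
    return NAL_UNIT_TYPES.get(header["nal_unit_type"], "other / not listed here")


for byte in (0x67, 0x65, 0x09):
    print(hex(byte), parse_nal_header(byte), describe_nal(byte))
```

Because the type and priority are visible in the first byte, a network element can treat parameter sets and IDR pictures differently from ordinary slices without decoding any video.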

6. Redundant processing

Compared with previous international standards such as H.263 and MPEG-4, H.264 makes fuller use of the various kinds of redundancy, namely statistical redundancy and visual physiological redundancy, in order to achieve efficient compression.

(1) Statistical redundancy

Statistical redundancy includes spectral redundancy (the correlation between color components), spatial redundancy, and temporal redundancy. Temporal redundancy is the fundamental difference between video compression and still image compression: video compression relies mainly on temporal redundancy to achieve a large compression ratio.

(2) Visual physiological redundancy

Visual physiological redundancy arises from the characteristics of the human visual system (HVS). For example, the human eye is less sensitive to the high-frequency components of the color components than to those of the brightness component, and it is not sensitive to noise in the high-frequency (i.e., detailed) parts of the image, and so on.

Video compression algorithms exploit these redundancies in different ways, but they focus mainly on spatial redundancy and temporal redundancy. H.264 also uses a hybrid structure, that is, it processes spatial redundancy and temporal redundancy separately. Spatial redundancy is removed through transformation and quantization, and a frame encoded this way is called an I frame. Temporal redundancy is removed through inter-frame prediction, that is, motion estimation and compensation, and a frame encoded this way is called a P frame or a B frame. Unlike previous standards, H.264 uses intra-frame prediction when encoding I frames and then encodes the prediction error. In this way the spatial correlation is fully exploited and coding efficiency improves.

H.264 intra-frame prediction uses 16x16 macroblocks as the basic unit. First, the encoder uses neighboring pixels in the same frame as the current macroblock as a reference to generate a prediction for the current macroblock. It then transforms and quantizes the prediction residual and entropy-codes the transformed and quantized result, and the entropy-coded output forms the bitstream. The reference data available on the decoder side is the reconstructed image after inverse quantization and inverse transformation. To keep the encoder and decoder consistent, the reference data used for prediction on the encoder side must be the same as on the decoder side, so the encoder also predicts from the reconstructed image after inverse quantization and inverse transformation.
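The requirement that the encoder predict from reconstructed data, not from the original, can be illustrated with a one-dimensional toy example. This is a simplified sketch and not the H.264 algorithm: the "prediction" is just the previous reconstructed sample, there is no transform, and the quantization step is a hypothetical value.

```python
def quantize(value, step):
    """Map a residual to an integer level (this is where information is lost)."""
    return round(value / step)


def dequantize(level, step):
    return level * step


def encode(samples, step):
    """Closed-loop predictive coding: predict from the reconstructed signal."""
    levels, reconstructed = [], []
    prediction = 0
    for sample in samples:
        level = quantize(sample - prediction, step)
        levels.append(level)
        # Rebuild exactly what the decoder will see and predict from that.
        prediction = prediction + dequantize(level, step)
        reconstructed.append(prediction)
    return levels, reconstructed


def decode(levels, step):
    """The decoder only has the levels, so it repeats the same reconstruction."""
    output = []
    prediction = 0
    for level in levels:
        prediction = prediction + dequantize(level, step)
        output.append(prediction)
    return output


samples = [10, 12, 15, 20, 18]  # hypothetical pixel values
levels, encoder_view = encode(samples, step=4)
print(levels, encoder_view, decode(levels, step=4))
```

If the encoder predicted from the original samples instead, its predictions would differ slightly from the decoder's, and the quantization error would accumulate from sample to sample instead of staying bounded.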

|

|

|

|

How far(long) the transmitter cover?

The transmission range depends on many factors. The true distance is based on the antenna installing height , antenna gain, using environment like building and other obstructions , sensitivity of the receiver, antenna of the receiver . Installing antenna more high and using in the countryside , the distance will much more far.

EXAMPLE 5W FM Transmitter use in the city and hometown:

I have a USA customer use 5W fm transmitter with GP antenna in his hometown ,and he test it with a car, it cover 10km(6.21mile).

I test the 5W fm transmitter with GP antenna in my hometown ,it cover about 2km(1.24mile).

I test the 5W fm transmitter with GP antenna in Guangzhou city ,it cover about only 300meter(984ft).

Below are the approximate range of different power FM Transmitters. ( The range is diameter )

0.1W ~ 5W FM Transmitter :100M ~1KM

5W ~15W FM Ttransmitter : 1KM ~ 3KM

15W ~ 80W FM Transmitter : 3KM ~10KM

80W ~500W FM Transmitter : 10KM ~30KM

500W ~1000W FM Transmitter : 30KM ~ 50KM

1KW ~ 2KW FM Transmitter : 50KM ~100KM

2KW ~5KW FM Transmitter : 100KM ~150KM

5KW ~10KW FM Transmitter : 150KM ~200KM

How to contact us for the transmitter?

Call me +8618078869184 OR

whatsapp:+86 18319244009

Email me [email protected]

1.How far you want to cover in diameter ?

2.How tall of you tower ?

3.Where are you from ?

And we will give you more professional advice.

About Us

FMUSER.ORG is a system integration company focusing on RF wireless transmission / studio video audio equipment / streaming and data processing .We are providing everything from advice and consultancy through rack integration to installation, commissioning and training.

We offer FM Transmitter, Analog TV Transmitter, Digital TV transmitter, VHF UHF Transmitter, Antennas, Coaxial Cable Connectors, STL, On Air Processing, Broadcast Products for the Studio, RF Signal Monitoring, RDS Encoders, Audio Processors and Remote Site Control Units, IPTV Products, Video / Audio Encoder / Decoder, designed to meet the needs of both large international broadcast networks and small private stations alike.

Our solution has FM Radio Station / Analog TV Station / Digital TV Station / Audio Video Studio Equipment / Studio Transmitter Link / Transmitter Telemetry System / Hotel TV System / IPTV Live Broadcasting / Streaming Live Broadcast / Video Conference / CATV Broadcasting system.

We are using advanced technology products for all the systems, because we know the high reliability and high performance are so important for the system and solution . At the same time we also have to make sure our products system with a very reasonable price.

We have customers of public and commercial broadcasters, telecom operators and regulation authorities , and we also offer solution and products to many hundreds of smaller, local and community broadcasters .

FMUSER.ORG has been exporting more than 15 years and have clients all over the world. With 13 years experience in this field ,we have a professional team to solve customer's all kinds of problems. We dedicated in supplying the extremely reasonable pricing of professional products & services. Contact email : [email protected]

Our Factory

We have modernization of the factory . You are welcome to visit our factory when you come to China.

At present , there are already 1095 customers around the world visited our Guangzhou Tianhe office . If you come to China , you are welcome to visit us .

At Fair

This is our participation in 2012 Global Sources Hong Kong Electronics Fair . Customers from all over the world finally have a chance to get together.

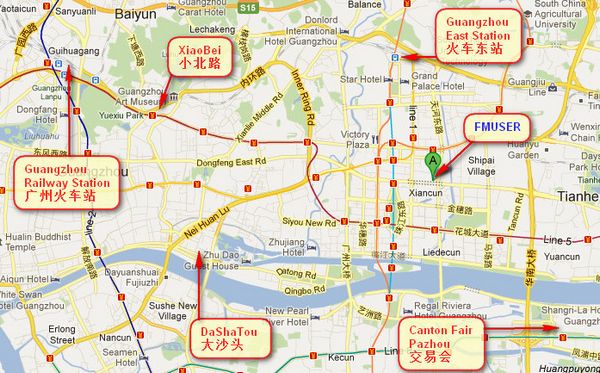

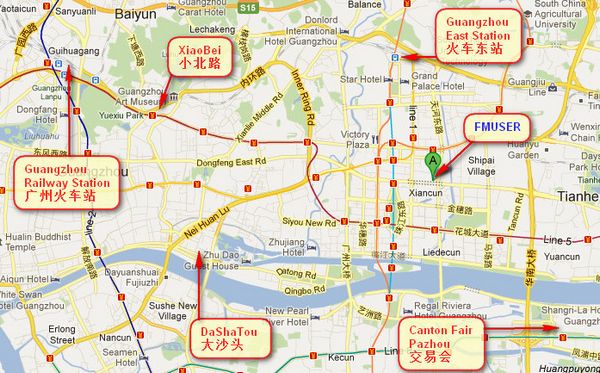

Where is Fmuser ?

You can search this numbers " 23.127460034623816,113.33224654197693 " in google map , then you can find our fmuser office .

FMUSER Guangzhou office is in Tianhe District which is the center of the Canton . Very near to the Canton Fair , guangzhou railway station, xiaobei road and dashatou , only need 10 minutes if take TAXI . Welcome friends around the world to visit and negotiate .

Contact: Kerwin

Cellphone: +8618078869184

whatsapp:+86 18319244009

Wechat: +8618078869184

E-mail: [email protected]

Address: No.305 Room HuiLan Building No.273 Huanpu Road Guangzhou China Zip:510620

|

|

|

|

English: We accept all payments , such as PayPal, Credit Card, Western Union, Alipay, Money Bookers, T/T, LC, DP, DA, OA, Payoneer, If you have any question , please contact me [email protected] or

whatsapp:+86 18319244009

-

PayPal.  www.paypal.com www.paypal.com

We recommend you use Paypal to buy our items ,The Paypal is a secure way to buy on internet .

Every of our item list page bottom on top have a paypal logo to pay.

Credit Card.If you do not have paypal,but you have credit card,you also can click the Yellow PayPal button to pay with your credit card.

---------------------------------------------------------------------

But if you have not a credit card and not have a paypal account or difficult to got a paypal accout ,You can use the following:

Western Union.  www.westernunion.com www.westernunion.com

Pay by Western Union to me :

First name/Given name: Yingfeng

Last name/Surname/ Family name: Zhang

Full name: Yingfeng Zhang

Country: China

City: Guangzhou

|

---------------------------------------------------------------------

T/T . Pay by T/T (wire transfer/ Telegraphic Transfer/ Bank Transfer)

First BANK INFORMATION (COMPANY ACCOUNT):

SWIFT BIC: BKCHHKHHXXX

Bank name: BANK OF CHINA (HONG KONG) LIMITED, HONG KONG

Bank Address: BANK OF CHINA TOWER, 1 GARDEN ROAD, CENTRAL, HONG KONG

BANK CODE: 012

Account Name : FMUSER INTERNATIONAL GROUP LIMITED

Account NO. : 012-676-2-007855-0

---------------------------------------------------------------------

Second BANK INFORMATION (COMPANY ACCOUNT):

Beneficiary: Fmuser International Group Inc

Account Number: 44050158090900000337

Beneficiary's Bank: China Construction Bank Guangdong Branch

SWIFT Code: PCBCCNBJGDX

Address: NO.553 Tianhe Road, Guangzhou, Guangdong,Tianhe District, China

**Note: When you transfer money to our bank account, please DO NOT write anything in the remark area, otherwise we won't be able to receive the payment due to government policy on international trade business.

|

|

|

|

* It will be sent in 1-2 working days when payment clear.

* We will send it to your paypal address. If you want to change address, please send your correct address and phone number to my email [email protected]

* If the packages is below 2kg,we will be shipped via post airmail, it will take about 15-25days to your hand.

If the package is more than 2kg,we will ship via EMS , DHL , UPS, Fedex fast express delivery,it will take about 7~15days to your hand.

If the package more than 100kg , we will send via DHL or air freight. It will take about 3~7days to your hand.

All the packages are form China guangzhou.

* Package will be sent as a "gift" and declear as less as possible,buyer don't need to pay for "TAX".

* After ship, we will send you an E-mail and give you the tracking number.

|

|

|

For Warranty .

Contact US--->>Return the item to us--->>Receive and send another replace .

Please return to this address and write your paypal address,name,problem on note: |

|