04. Introduction to the basics of live broadcasting

1. Capture video and audio

*1.1 Video and audio capture framework*

AVFoundation: A framework for playing and creating time-based audiovisual media. It provides an Objective-C interface for manipulating that media, for example editing, rotating, and re-encoding it.

*1.2 Video and audio hardware equipment *

CCD: An image sensor that converts images into electrical signals during image capture and processing.

Pickup: A sound sensor that converts sound into electrical signals during audio capture and processing.

Audio sample data: generally in PCM format

Video sample data: generally in YUV or RGB format. The raw audio and video captured are very large and must be compressed before they can be transmitted efficiently.
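To get a feel for how large raw capture data is, the arithmetic can be sketched as below. The resolution, frame rate, and audio parameters are illustrative assumptions (720p at 30 fps in 8-bit YUV 4:2:0, and 44.1 kHz 16-bit stereo PCM), not values taken from this article.

```python
def yuv420_frame_bytes(width: int, height: int) -> int:
    """Bytes in one 8-bit YUV 4:2:0 frame: a full-size Y plane
    plus two chroma planes at a quarter of the resolution each."""
    return width * height * 3 // 2


def raw_video_bytes_per_second(width: int, height: int, fps: int) -> int:
    """Uncompressed video data rate in bytes per second."""
    return yuv420_frame_bytes(width, height) * fps


def pcm_bytes_per_second(sample_rate: int, channels: int, bits_per_sample: int) -> int:
    """Uncompressed PCM audio data rate in bytes per second."""
    return sample_rate * channels * bits_per_sample // 8


# Assumed capture settings, chosen only for illustration.
video_rate = raw_video_bytes_per_second(1280, 720, 30)
audio_rate = pcm_bytes_per_second(44_100, 2, 16)
print(f"raw video: {video_rate / 1e6:.1f} MB/s, raw audio: {audio_rate / 1e3:.1f} kB/s")
```

Under these assumptions the raw video alone is roughly 41 MB per second, which is why encoding comes before transmission.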

2. Video processing (beauty filters, watermarks)

Video processing principle: Because video is ultimately rendered to the screen by the GPU, frame by frame, we can use OpenGL ES to process the video frames and give the video various effects. It is like water flowing from a tap, passing through several pipes, and then flowing on to different targets.
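The "water through pipes" idea can be sketched as a chain of filter functions applied to each frame in turn. This is a CPU-only toy in Python, not OpenGL ES or GPUImage code; the frame representation (nested lists of grayscale values) and the filter names `brighten`, `invert`, and `chain` are hypothetical.

```python
from functools import reduce
from typing import Callable, List

Frame = List[List[int]]            # toy grayscale frame, pixel values 0..255
Filter = Callable[[Frame], Frame]


def brighten(amount: int) -> Filter:
    """Return a filter that adds `amount` to every pixel, clamped at 255."""
    def apply(frame: Frame) -> Frame:
        return [[min(255, p + amount) for p in row] for row in frame]
    return apply


def invert(frame: Frame) -> Frame:
    """Filter that inverts every pixel."""
    return [[255 - p for p in row] for row in frame]


def chain(*filters: Filter) -> Filter:
    """Compose filters left to right, like pipes attached one after another."""
    return lambda frame: reduce(lambda current, flt: flt(current), filters, frame)


pipeline = chain(brighten(40), invert)
print(pipeline([[0, 100], [230, 255]]))
```

Each filter takes a frame and returns a new one, so filters can be added, removed, or reordered without touching the others.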

All kinds of beauty-filter and video special-effects apps today are built with the GPUImage framework.

*Video processing framework*

GPUImage: A powerful image and video processing framework based on OpenGL ES. It wraps a variety of filters, lets you write custom filters, and ships with more than 120 built-in common filter effects.

OpenGL: OpenGL (Open Graphics Library) is a specification that defines a cross-language, cross-platform programming interface for rendering three-dimensional (and also two-dimensional) graphics. It is a professional graphics interface and a powerful, easy-to-call low-level graphics library.

OpenGL ES: OpenGL ES (OpenGL for Embedded Systems) is a subset of the OpenGL 3D graphics API, designed for embedded devices such as mobile phones, PDAs, and game consoles.

3. Video encoding and decoding

*3.1 Video coding framework *

FFmpeg: A cross-platform, open source multimedia framework that provides video encoding, decoding, transcoding, streaming, and playback. It supports a very wide range of formats and protocols, covering almost all audio and video codecs, container formats, and playback protocols.

- libswresample: Resamples audio, rematrixes channels, and converts sample formats.

- libavcodec: Provides a general codec framework, including encoders and decoders for many video, audio, and subtitle streams.

- libavformat: Muxes and demuxes container formats.

- libavutil: Contains common utility functions, such as random number generation, data structures, and mathematical operations.

- libpostproc: Performs video post-processing.

- libswscale: Scales video images and converts color spaces.

- libavfilter: Provides filtering.

x264: Encodes and compresses raw YUV video data into H.264.

VideoToolbox: Apple's own API for hardware video decoding and encoding, opened to developers only from iOS 8 onward.

AudioToolbox: Apple's own API for hardware audio decoding and encoding.

*3.2 Video coding technology *

Video compression coding standards: Technologies for compressing (encoding) and decompressing (decoding) video, such as MPEG and H.264.

Main function: Compress video pixel data into a video bitstream, reducing the amount of data. Uncompressed video is usually very large; a single movie may need hundreds of gigabytes of space.

Note: Video quality is determined mainly by the encoded video and audio data; it has nothing to do with the container format.

MPEG: A video compression method that uses inter-frame compression, storing only the differences between consecutive frames to achieve a higher compression ratio.

H.264/AVC: A video compression method that uses advance prediction together with the same P/B-frame prediction used in MPEG. It can produce a video stream suited to the network conditions as needed, with a higher compression ratio and better image quality.

Note 1: Comparing the sharpness of a single frame, MPEG-4 has the advantage; comparing the sharpness of continuous motion, H.264 has the advantage.

Note 2: The H.264 algorithm is more complex and harder to implement, and it needs more processor and memory resources to run, so H.264 places relatively high demands on the system.

Note 3: H.264 is more flexible and leaves some implementation choices to vendors. This brings many benefits, but interoperability between products can become a serious problem, leading to awkward situations where data encoded by Company A's encoder can only be decoded by Company A's decoder.

H.265/HEVC: A video compression method built on H.264. It keeps some of the original techniques and improves others to reach a better trade-off among bitrate, encoding quality, latency, and algorithm complexity.

H.265 is a more efficient coding standard: at the same image quality it compresses content to a smaller size, so it transmits faster and saves bandwidth.

I frame: (key frame) Stores a complete picture. It can be decoded using only its own data, because it contains the whole picture.

P frame: (difference frame) Stores the difference between this frame and the previous frame. To decode it, the previously buffered picture is combined with the difference defined by this frame to produce the final picture. (A P frame has no complete picture data, only the data that differs from the previous frame.)

B frame: (bidirectional difference frame) Stores the differences between this frame and both the previous and the following frames. To decode a B frame, the decoder needs not only the previously buffered picture but also the decoded following picture; the final picture is obtained by combining the preceding and following pictures with this frame's data. B frames compress well, but decoding them costs more CPU.

Intra-frame compression: When compressing a frame, only the data within that frame is considered, ignoring redundancy between adjacent frames. Intra-frame compression generally uses a lossy algorithm.

Inter-frame compression: Also called temporal compression; it compresses data by comparing frames along the time axis. Storing frame differences is lossless in principle, although practical codecs such as H.264 quantize the differences, which makes the encoded result lossy.
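The relationship between I frames and P frames can be illustrated with a toy delta encoder. This is only a sketch under simplifying assumptions: frames are flat lists of pixel values of equal length, differences are stored exactly (no quantization, no motion compensation), B frames are left out, and the names `encode`, `decode`, and `gop` are hypothetical.

```python
from typing import Dict, List, Tuple, Union

Frame = List[int]                                   # toy frame: flat list of pixels
Packet = Tuple[str, Union[List[int], Dict[int, int]]]


def encode(frames: List[Frame], gop: int = 4) -> List[Packet]:
    """Emit a full I frame every `gop` frames and P frames in between.
    A P frame stores only the pixels that differ from the previous frame."""
    packets: List[Packet] = []
    prev = None
    for n, frame in enumerate(frames):
        if prev is None or n % gop == 0:
            packets.append(("I", list(frame)))
        else:
            diff = {i: v for i, (v, old) in enumerate(zip(frame, prev)) if v != old}
            packets.append(("P", diff))
        prev = frame
    return packets


def decode(packets: List[Packet]) -> List[Frame]:
    """Rebuild frames: an I frame replaces the buffered picture,
    a P frame patches the buffered picture with its differences."""
    frames: List[Frame] = []
    current: Frame = []
    for kind, payload in packets:
        if kind == "I":
            current = list(payload)
        else:
            current = list(current)
            for index, value in payload.items():
                current[index] = value
        frames.append(current)
    return frames


frames = [[1, 2, 3, 4], [1, 2, 9, 4], [1, 2, 9, 5]]
packets = encode(frames)
assert decode(packets) == frames      # exact differences round-trip losslessly
```

In this example the second and third packets carry a single changed pixel each, which is where the compression gain comes from. A player that joins mid-stream has to wait for the next I frame before it can show a picture.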

Muxing (multiplexing): Packaging video, audio, and even subtitle streams into a single file (a container format such as FLV or TS) so they can be transmitted as one signal.
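Conceptually, muxing interleaves packets from several streams in timestamp order, and demuxing splits them apart again. The sketch below shows only that idea; it does not produce a real FLV or TS file, and the packet layout `(timestamp_ms, stream_name, payload)` is an assumption made for illustration.

```python
import heapq
from typing import Dict, Iterable, List, Tuple

Packet = Tuple[int, str, bytes]      # (timestamp in ms, stream name, payload)


def mux(*streams: Iterable[Packet]) -> List[Packet]:
    """Interleave already time-ordered streams into one sequence by timestamp."""
    return list(heapq.merge(*streams, key=lambda pkt: pkt[0]))


def demux(container: Iterable[Packet]) -> Dict[str, List[Packet]]:
    """Split an interleaved sequence back into per-stream packet lists."""
    streams: Dict[str, List[Packet]] = {}
    for pkt in container:
        streams.setdefault(pkt[1], []).append(pkt)
    return streams


video = [(0, "video", b"I"), (40, "video", b"P"), (80, "video", b"P")]
audio = [(0, "audio", b"a0"), (23, "audio", b"a1"), (46, "audio", b"a2"), (69, "audio", b"a3")]

container = mux(video, audio)
print([name for _, name, _ in container])
```

Interleaving by timestamp lets a player start decoding audio and video together without reading the whole file first.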

*3.3 Audio coding technology *

AAC, MP3: Audio coding technologies used to compress audio

*3.4 Rate control *

Multi-bitrate: Viewers' network conditions vary widely: WiFi, 4G, 3G, or even 2G. How can all of them be served? Provide several streams and choose the bitrate that suits the viewer's current network environment.

For example, video players often offer options such as 1024, 720, HD, SD, and smooth, which correspond to different bitrates.

*3.5 Video packaging format *

TS: A streaming media container format. A streaming container does not need an index to be loaded before playback starts, which greatly reduces the initial loading delay. For a long movie, the index of an MP4 file can be quite large, which hurts the user experience.

Why use TS: Two TS segments can be spliced seamlessly, so the player can play them back to back without interruption.

FLV: A streaming media container format. Its very small file size and very fast loading made it practical to watch video over the Internet, which made FLV a mainstream format for online video.

4. Push Stream

*4.1 Data Transmission Framework *

librtmp: used to transmit data in RTMP protocol format

*4.2 Streaming media data transmission protocol *

RTMP: Real-Time Messaging Protocol, an open protocol developed by Adobe Systems for transmitting audio, video, and data between a Flash player and a server. Because it is an open protocol, anyone can use it.

The RTMP protocol is used for the transmission of objects, video, and audio.

The protocol runs on top of TCP or over polling HTTP.

RTMP acts like a container for data packets, which can carry audio and video data in FLV format. A single connection can carry multiple network streams over different channels, and the packets in these channels are sent as fixed-size chunks.

chunk: a fragment of a message (a message packet)
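The fixed-size chunking described above can be sketched as follows. This is a simplification: real RTMP chunks also carry a header identifying the chunk stream and message, which is omitted here. The 128-byte value is RTMP's default maximum chunk size; the function names are hypothetical.

```python
from typing import List

DEFAULT_CHUNK_SIZE = 128   # RTMP's default maximum chunk payload size, in bytes


def split_into_chunks(message: bytes, chunk_size: int = DEFAULT_CHUNK_SIZE) -> List[bytes]:
    """Cut one message payload into chunks of at most `chunk_size` bytes.
    (Chunk headers are left out of this sketch.)"""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [message[i:i + chunk_size] for i in range(0, len(message), chunk_size)]


def reassemble(chunks: List[bytes]) -> bytes:
    """Receiver side: concatenate chunk payloads back into the message."""
    return b"".join(chunks)


message = bytes(300)                       # a 300-byte dummy message
chunks = split_into_chunks(message)
print([len(c) for c in chunks])
```

Small chunks let a large video message be interleaved with audio chunks on the same connection, so a big frame does not block everything behind it.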

5. Streaming Media Server

*5.1 Commonly used servers *

SRS: An excellent open source streaming media server developed in China

BMS: Also a streaming media server, but not open source. It is the commercial version of SRS and has more features than SRS

nginx: A free, open source web server that is also commonly used to set up streaming media servers.

*5.2 Data distribution *

CDN: (Content Delivery Network) Publishes a site's content to the network "edge" closest to users so that they can fetch what they need from a nearby node. This relieves Internet congestion and improves the response time users see when accessing the site.

A CDN node acts as a proxy server, in effect an intermediary.

How a CDN works, using a request for streaming media data as an example:

1. Upload streaming media data to the server (origin site)

2. The origin site stores the streaming media data

3. The client plays the streaming media and requests the encoded streaming media data from the CDN

4. The CDN server responds to the request. If the streaming media data does not exist on the node, it requests the data from the origin site; if the data is already cached on the node, skip to step 6.

5. The origin site responds to the CDN request and distributes the streaming media to the corresponding CDN node

6. The CDN sends streaming media data to the client

Back-to-origin: When a user requests a URL and the CDN node it resolves to has not cached the response, or the cached copy has expired, the node goes back to the origin site to fetch it. If nobody requests the content, the CDN node does not proactively fetch it from the origin site.
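The request flow and the back-to-origin rule can be sketched with a small in-memory model. The class names `Origin` and `EdgeNode`, the `ttl` parameter, and the explicit `now` argument (used instead of a real clock so the behavior is easy to follow) are all assumptions made for illustration.

```python
from typing import Dict, Tuple


class Origin:
    """Origin site: stores the media and counts how often it is asked for it."""

    def __init__(self, content: Dict[str, bytes]) -> None:
        self.content = dict(content)
        self.requests = 0

    def fetch(self, url: str) -> bytes:
        self.requests += 1
        return self.content[url]


class EdgeNode:
    """CDN edge node: serves from cache, goes back to origin on a miss or expiry."""

    def __init__(self, origin: Origin, ttl: float) -> None:
        self.origin = origin
        self.ttl = ttl
        self.cache: Dict[str, Tuple[bytes, float]] = {}

    def get(self, url: str, now: float) -> bytes:
        entry = self.cache.get(url)
        if entry is not None and now - entry[1] < self.ttl:
            return entry[0]                     # cache hit: answer directly
        data = self.origin.fetch(url)           # miss or expired: back-to-origin
        self.cache[url] = (data, now)
        return data


origin = Origin({"/live/seg1.ts": b"segment-data"})
edge = EdgeNode(origin, ttl=10)

edge.get("/live/seg1.ts", now=0)    # first request: fetched from origin
edge.get("/live/seg1.ts", now=5)    # served from the edge cache
edge.get("/live/seg1.ts", now=20)   # cache expired: back-to-origin again
print(origin.requests)
```

Note that the edge node only contacts the origin when a client asks for something, which matches the last sentence of the back-to-origin description.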

Bandwidth: The total amount of data that can be transmitted in a fixed amount of time.

For example, a 64-bit front-side bus running at 800 MHz has a data transfer rate of 64 bit × 800 MHz ÷ 8 (bits per byte) = 6.4 GB/s.
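The same calculation, written out as a small helper (the function name is hypothetical):

```python
def bus_bandwidth_bytes_per_second(width_bits: int, clock_hz: int) -> int:
    """Bytes per second for a bus moving `width_bits` bits on every clock cycle."""
    return width_bits * clock_hz // 8      # 8 bits per byte


rate = bus_bandwidth_bytes_per_second(64, 800_000_000)
print(f"{rate / 1e9:.1f} GB/s")            # prints "6.4 GB/s"
```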

Load balancing: Multiple servers form a server set in a symmetrical arrangement. Every server has equal status and can serve requests independently, without help from the others.

A load-sharing technique distributes incoming external requests evenly across the servers in this symmetric structure, and the server that receives a request responds to the client on its own.

Load balancing spreads client requests evenly over the server array, which provides fast access to important data and handles large numbers of concurrent requests.

This kind of clustering can achieve performance close to that of a mainframe at minimal cost.
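One simple load-sharing technique is round robin, where requests are handed to the servers in rotation. The article does not say which technique is meant, so the sketch below is just one possible instance; the class name and server names are hypothetical.

```python
from collections import Counter
from itertools import cycle
from typing import Iterable


class RoundRobinBalancer:
    """Hand each incoming request to the next server in a fixed rotation."""

    def __init__(self, servers: Iterable[str]) -> None:
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def route(self, request: object) -> str:
        """Return the name of the server that should handle this request."""
        return next(self._rotation)


balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])
counts = Counter(balancer.route(i) for i in range(9))
print(counts)
```

Round robin ignores how busy each server actually is; weighted or least-connections strategies are common refinements when servers or requests differ in cost.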

QoS (bandwidth management): Limits the bandwidth available to each group so that limited bandwidth is used to the greatest effect.

6. Pull Stream

Live broadcast protocol selection:

RTMP or RTSP can be used when real-time performance or interactivity is important

HLS is recommended when playback (replay) or cross-platform support is required

Live broadcast protocol comparison: (5)

HLS: HTTP Live Streaming, a real-time streaming protocol defined by Apple. HLS is built on HTTP, and what it transmits has two parts: the M3U8 description (playlist) file and the TS media files. It supports both live and on-demand streaming and is used mainly on iOS.

HLS implements live broadcasting using on-demand techniques.
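The M3U8 description file is plain text that lists the TS segments. A minimal sketch of generating a live media playlist is shown below; the tags used are standard HLS tags, while the function name, segment names, and durations are made up for illustration.

```python
import math
from typing import List, Tuple


def build_media_playlist(segments: List[Tuple[str, float]], media_sequence: int = 0) -> str:
    """Build a live HLS media playlist from (uri, duration_seconds) pairs.
    No #EXT-X-ENDLIST tag is written, so players keep polling for new segments."""
    target_duration = math.ceil(max(duration for _, duration in segments))
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{target_duration}",
        f"#EXT-X-MEDIA-SEQUENCE:{media_sequence}",
    ]
    for uri, duration in segments:
        lines.append(f"#EXTINF:{duration:.3f},")
        lines.append(uri)
    return "\n".join(lines) + "\n"


playlist = build_media_playlist([("seg10.ts", 6.0), ("seg11.ts", 5.5)], media_sequence=10)
print(playlist)
```

For a live stream the server keeps rewriting this file, dropping old segments and appending new ones, while the client re-downloads it over ordinary HTTP. That is the sense in which HLS delivers live video with on-demand techniques.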

HLS supports adaptive bitrate streaming: the client automatically selects among video streams of different bitrates according to network conditions, using a high bitrate when conditions allow and a low bitrate when the network is busy, and switching between them automatically. This is very helpful for keeping playback smooth when a mobile device's network conditions are unstable.

This works because the server provides the video at multiple bitrates and lists them in the playlist file, and the player adjusts automatically based on playback progress and download speed.
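The player-side choice can be sketched as "pick the highest bitrate that fits the measured download speed". Real players use more elaborate heuristics (buffer level, switching history); the function name, the 0.8 safety factor, and the variant list below are assumptions made for illustration.

```python
from typing import List, Tuple

Variant = Tuple[int, str]     # (bandwidth in bits per second, playlist URI)


def choose_variant(variants: List[Variant], measured_bps: float, safety: float = 0.8) -> Variant:
    """Pick the highest-bitrate variant that fits within a safety margin of the
    measured throughput; fall back to the lowest bitrate if none fits."""
    budget = measured_bps * safety
    affordable = [v for v in variants if v[0] <= budget]
    return max(affordable) if affordable else min(variants)


variants = [
    (400_000, "low.m3u8"),
    (1_200_000, "mid.m3u8"),
    (3_000_000, "high.m3u8"),
]
print(choose_variant(variants, measured_bps=2_000_000))
```

With 2 Mbit/s measured, the budget is 1.6 Mbit/s, so the middle variant is chosen; re-running the choice after each segment download gives the automatic switching described above.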

Comparison of HLS and RTMP: The main drawback of HLS is its relatively high latency, while the main advantage of RTMP is low latency.

The small-segment approach of HLS generates a large number of files, and storing or processing them consumes considerable resources.

Compared with RTSP, the advantage of HLS is that once segmentation is complete, distribution needs no special software at all. An ordinary web server is sufficient, which greatly reduces the configuration requirements for CDN edge servers, so any off-the-shelf CDN can be used, whereas general-purpose servers rarely support RTSP.

HTTP-FLV: Streaming media content delivered over the HTTP protocol.

Compared with RTMP, HTTP is simpler and more widely understood. Content latency can also be kept to 1~3 seconds, and playback starts faster because HTTP itself has no complex state interaction. From a latency perspective, HTTP-FLV therefore does better than RTMP.

RTSP: Real-Time Streaming Protocol. It defines how one-to-many applications can transmit multimedia data efficiently over an IP network.

RTP: Real-time Transport Protocol. RTP is usually built on UDP and is often used together with RTCP. It does not itself provide timely delivery or other quality-of-service (QoS) guarantees; it relies on lower-layer services for that.

RTCP: The companion protocol to RTP. Its main function is to provide feedback on the quality of service (QoS) that RTP delivers, and to collect statistics about the media connection, such as bytes transmitted, packets transmitted, packets lost, and one-way and round-trip network delay.
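As a rough illustration of what RTP carries and what RTCP reports on, the sketch below parses the 12-byte fixed RTP header and estimates packet loss from gaps in the sequence numbers. The loss estimate is deliberately simplified (it ignores 16-bit sequence number wraparound and reordering), and the function names and sample values are hypothetical.

```python
import struct
from typing import Dict, List


def parse_rtp_header(packet: bytes) -> Dict[str, object]:
    """Parse the 12-byte fixed RTP header (network byte order)."""
    if len(packet) < 12:
        raise ValueError("RTP packet is shorter than the fixed header")
    b0, b1, seq, timestamp, ssrc = struct.unpack("!BBHII", packet[:12])
    return {
        "version": b0 >> 6,
        "padding": bool(b0 & 0x20),
        "extension": bool(b0 & 0x10),
        "csrc_count": b0 & 0x0F,
        "marker": bool(b1 & 0x80),
        "payload_type": b1 & 0x7F,
        "sequence_number": seq,
        "timestamp": timestamp,
        "ssrc": ssrc,
    }


def count_lost(sequence_numbers: List[int]) -> int:
    """Packets expected between the lowest and highest sequence number seen,
    minus the packets actually received (wraparound is not handled here)."""
    expected = max(sequence_numbers) - min(sequence_numbers) + 1
    return expected - len(set(sequence_numbers))


# Hand-built sample packet: version 2, marker set, payload type 96.
sample = struct.pack("!BBHII", 0x80, 0x80 | 96, 1234, 90_000, 0x12345678) + b"payload"
header = parse_rtp_header(sample)
print(header["version"], header["payload_type"], header["sequence_number"])
print(count_lost([10, 11, 13, 14, 17]))
```

The sequence number is what makes loss detectable at all on top of UDP; RTCP receiver reports then carry statistics of this kind back to the sender.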

7. Decoding

*7.1 Decapsulation *

Demuxing (separation): Splitting a file (a container format such as FLV or TS) that was muxed from video, audio, and subtitle streams back into those separate streams, which are then decoded individually.

*7.2 Audio coding framework *

fdk_aac: An audio encoding and decoding framework that converts between PCM audio data and AAC audio data

*7.3 Decoding introduction *

Hard decoding: Decoding on the GPU, which offloads work from the CPU

Advantages: smooth playback, low power consumption, fast decoding

Disadvantages: poor compatibility

Soft decoding: Decoding on the CPU

Advantages: good compatibility

Disadvantages: higher CPU load, higher power consumption, less smooth than hard decoding, and relatively slow decoding

8. Play

ijkplayer: An open source Android/iOS video player based on FFmpeg

The API is easy to integrate;

The build configuration can be trimmed, which helps control the installation package size;

Supports hardware-accelerated decoding, which saves power;

Simple to use: specify the stream URL, and it decodes and plays automatically.

9. Chat interaction

IM: (Instant Messaging) A real-time communication system that lets two or more people exchange text messages, files, voice, and video over the network in real time.

The main role of IM in a live broadcast system is to enable text interaction between the audience and the anchor, and among audience members.

*Third Party SDK *

Tencent Cloud: Instant messaging SDK provided by Tencent, which can be used as a live chat room

Rongyun: A commonly used instant messaging SDK that can be used as a live chat room

05. How to quickly develop a complete iOS live streaming app

1. Use third-party live streaming SDK for rapid development

Qiniu Cloud: Qiniu Live Cloud is a global, enterprise-grade live streaming cloud service built specifically for live streaming platforms, with SDKs that cover the end-to-end live streaming scenario.

* Live streaming platforms such as Panda TV and Dragon Ball TV all use Qiniu Cloud

NetEase Video Cloud: Built on professional cross-platform video codec technology and a large-scale video content distribution network, it provides stable, smooth, low-latency, high-concurrency real-time audio and video services and lets you integrate live video into your own app seamlessly.

2. Why do third-party SDK companies provide SDKs to us?

They hope to tie our product to theirs so that we come to depend on them more.

Their technology earns revenue, which supports a large team of programmers.

3. Live broadcast function: self-research or use third-party live broadcast SDK development?

Third-party SDK development: For a start-up team, building live broadcasting in-house faces high barriers in technology, CDN, and bandwidth, and it takes a long time to produce a finished product, which makes it harder to attract investment.

Self-research: When the company's live broadcast platform is large, building in-house saves costs in the long run, and the technology is far more controllable than with an SDK.

4. Third-party SDK benefits

Lower cost

With good third-party enterprise services, you no longer have to pay headhunters high fees to poach expensive star engineers, or cater to their temperaments.

Improved efficiency

Third-party services are focused on one job and their code is convenient to integrate; integration may take only 1-2 hours, saving nearly 99% of the time. That frees up time to compete with rivals and increases the chance of success.

Reduced risk

Professional third-party services are fast, professional, and stable, so they can greatly strengthen a product's competitiveness (service quality, development speed, and so on) and shorten trial-and-error time, which can be a lifeline for a start-up.

|

|

|

|

How far(long) the transmitter cover?

The transmission range depends on many factors. The true distance is based on the antenna installing height , antenna gain, using environment like building and other obstructions , sensitivity of the receiver, antenna of the receiver . Installing antenna more high and using in the countryside , the distance will much more far.

EXAMPLE 5W FM Transmitter use in the city and hometown:

I have a USA customer use 5W fm transmitter with GP antenna in his hometown ,and he test it with a car, it cover 10km(6.21mile).

I test the 5W fm transmitter with GP antenna in my hometown ,it cover about 2km(1.24mile).

I test the 5W fm transmitter with GP antenna in Guangzhou city ,it cover about only 300meter(984ft).

Below are the approximate range of different power FM Transmitters. ( The range is diameter )

0.1W ~ 5W FM Transmitter :100M ~1KM

5W ~15W FM Ttransmitter : 1KM ~ 3KM

15W ~ 80W FM Transmitter : 3KM ~10KM

80W ~500W FM Transmitter : 10KM ~30KM

500W ~1000W FM Transmitter : 30KM ~ 50KM

1KW ~ 2KW FM Transmitter : 50KM ~100KM

2KW ~5KW FM Transmitter : 100KM ~150KM

5KW ~10KW FM Transmitter : 150KM ~200KM

How to contact us for the transmitter?

Call me +8618078869184 OR

Email me [email protected]

1.How far you want to cover in diameter ?

2.How tall of you tower ?

3.Where are you from ?

And we will give you more professional advice.

About Us

FMUSER.ORG is a system integration company focusing on RF wireless transmission / studio video audio equipment / streaming and data processing .We are providing everything from advice and consultancy through rack integration to installation, commissioning and training.

We offer FM Transmitter, Analog TV Transmitter, Digital TV transmitter, VHF UHF Transmitter, Antennas, Coaxial Cable Connectors, STL, On Air Processing, Broadcast Products for the Studio, RF Signal Monitoring, RDS Encoders, Audio Processors and Remote Site Control Units, IPTV Products, Video / Audio Encoder / Decoder, designed to meet the needs of both large international broadcast networks and small private stations alike.

Our solution has FM Radio Station / Analog TV Station / Digital TV Station / Audio Video Studio Equipment / Studio Transmitter Link / Transmitter Telemetry System / Hotel TV System / IPTV Live Broadcasting / Streaming Live Broadcast / Video Conference / CATV Broadcasting system.

We are using advanced technology products for all the systems, because we know the high reliability and high performance are so important for the system and solution . At the same time we also have to make sure our products system with a very reasonable price.

We have customers of public and commercial broadcasters, telecom operators and regulation authorities , and we also offer solution and products to many hundreds of smaller, local and community broadcasters .

FMUSER.ORG has been exporting more than 15 years and have clients all over the world. With 13 years experience in this field ,we have a professional team to solve customer's all kinds of problems. We dedicated in supplying the extremely reasonable pricing of professional products & services. Contact email : [email protected]

Our Factory

We have modernization of the factory . You are welcome to visit our factory when you come to China.

At present , there are already 1095 customers around the world visited our Guangzhou Tianhe office . If you come to China , you are welcome to visit us .

At Fair

This is our participation in 2012 Global Sources Hong Kong Electronics Fair . Customers from all over the world finally have a chance to get together.

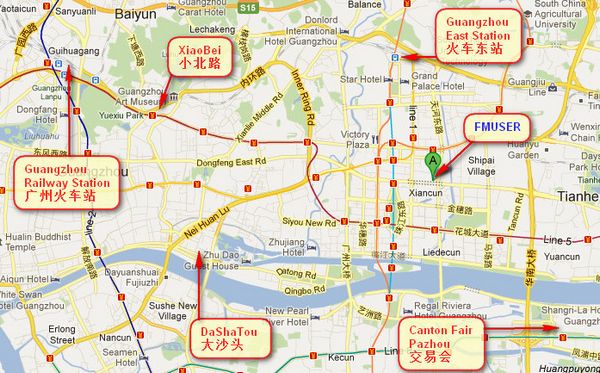

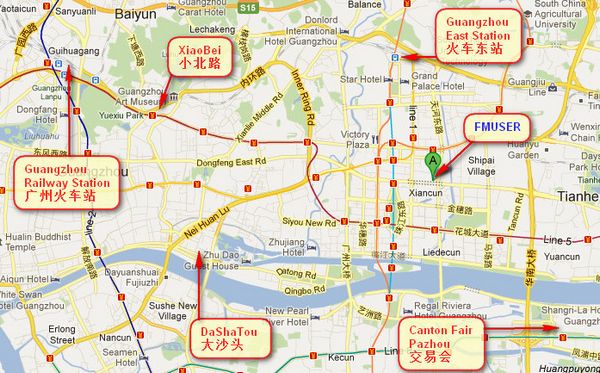

Where is Fmuser ?

You can search this numbers " 23.127460034623816,113.33224654197693 " in google map , then you can find our fmuser office .

FMUSER Guangzhou office is in Tianhe District which is the center of the Canton . Very near to the Canton Fair , guangzhou railway station, xiaobei road and dashatou , only need 10 minutes if take TAXI . Welcome friends around the world to visit and negotiate .

Contact: Sky Blue

Cellphone: +8618078869184

WhatsApp: +8618078869184

Wechat: +8618078869184

E-mail: [email protected]

QQ: 727926717

Skype: sky198710021

Address: No.305 Room HuiLan Building No.273 Huanpu Road Guangzhou China Zip:510620

|

|

|

|

English: We accept all payments , such as PayPal, Credit Card, Western Union, Alipay, Money Bookers, T/T, LC, DP, DA, OA, Payoneer, If you have any question , please contact me [email protected] or WhatsApp +8618078869184

-

PayPal.  www.paypal.com www.paypal.com

We recommend you use Paypal to buy our items ,The Paypal is a secure way to buy on internet .

Every of our item list page bottom on top have a paypal logo to pay.

Credit Card.If you do not have paypal,but you have credit card,you also can click the Yellow PayPal button to pay with your credit card.

---------------------------------------------------------------------

But if you have not a credit card and not have a paypal account or difficult to got a paypal accout ,You can use the following:

Western Union.  www.westernunion.com www.westernunion.com

Pay by Western Union to me :

First name/Given name: Yingfeng

Last name/Surname/ Family name: Zhang

Full name: Yingfeng Zhang

Country: China

City: Guangzhou

|

---------------------------------------------------------------------

T/T . Pay by T/T (wire transfer/ Telegraphic Transfer/ Bank Transfer)

First BANK INFORMATION (COMPANY ACCOUNT):

SWIFT BIC: BKCHHKHHXXX

Bank name: BANK OF CHINA (HONG KONG) LIMITED, HONG KONG

Bank Address: BANK OF CHINA TOWER, 1 GARDEN ROAD, CENTRAL, HONG KONG

BANK CODE: 012

Account Name : FMUSER INTERNATIONAL GROUP LIMITED

Account NO. : 012-676-2-007855-0

---------------------------------------------------------------------

Second BANK INFORMATION (COMPANY ACCOUNT):

Beneficiary: Fmuser International Group Inc

Account Number: 44050158090900000337

Beneficiary's Bank: China Construction Bank Guangdong Branch

SWIFT Code: PCBCCNBJGDX

Address: NO.553 Tianhe Road, Guangzhou, Guangdong,Tianhe District, China

**Note: When you transfer money to our bank account, please DO NOT write anything in the remark area, otherwise we won't be able to receive the payment due to government policy on international trade business.

|

|

|

|

* It will be sent in 1-2 working days when payment clear.

* We will send it to your paypal address. If you want to change address, please send your correct address and phone number to my email [email protected]

* If the packages is below 2kg,we will be shipped via post airmail, it will take about 15-25days to your hand.

If the package is more than 2kg,we will ship via EMS , DHL , UPS, Fedex fast express delivery,it will take about 7~15days to your hand.

If the package more than 100kg , we will send via DHL or air freight. It will take about 3~7days to your hand.

All the packages are form China guangzhou.

* Package will be sent as a "gift" and declear as less as possible,buyer don't need to pay for "TAX".

* After ship, we will send you an E-mail and give you the tracking number.

|

|

|

For Warranty .

Contact US--->>Return the item to us--->>Receive and send another replace .

Name: Liu xiaoxia

Address: 305Fang HuiLanGe HuangPuDaDaoXi 273Hao TianHeQu Guangzhou China.

ZIP:510620

Phone: +8618078869184

Please return to this address and write your paypal address,name,problem on note: |

|